Reclaim AI – Back-To-The-Humans? – what did we learn?

“Artificial intelligence, robots and strange things” was how Wendy Grossman, a technology journalist and the author of ‘Net.wars’, opened the most recent Cybersalon discussion.

Even though robots and artificial intelligence seem a modern notion, humans have always dreamt of, and tried to create, machines that would make their lives easier. As Wendy pointed out, one of the oldest examples comes from ancient Greece, in the form of a human-like “robot” that mixed and poured drinks. While in the past such inventions were undoubtedly “a rich person’s toy”, the advent of AI has meant that such technology is now more democratised. Many of us would be content with a robot barman, but the sheer variety of roles future machines will perform, and the power they will be given, mean that we should give serious thought to issues such as oversight.

Bill Thompson, a leading technology writer and broadcaster at BBC Click, kicked off a debate on ethics and robotics by arguing against Asimov’s Laws of Robotics.

He supported his stance with a short clip from the Channel 4 series ‘Humans’, which showed a human-like robot being subjected to merciless emotional control by his creator. The master was seen repeatedly manipulating Fred, the robot, by ordering the machine to strangle him, only to invoke the robot’s unwavering loyalty seconds later. Fred, whose face betrayed profound despair despite its robotic impassivity, could be heard asking: “Professor, what have you done to me?”, before seemingly answering his own question: “You trap us in our own minds, give us feelings but take away free will.”

Bill admitted that it was that very moment in the series, and in particular the robot’s facial expression, that led him to the view that it is “morally indefensible” to give a machine consciousness while controlling its actions by taking away its free will. The ethical imperative, he argued, would therefore be to disapply Asimov’s laws to any future creations endowed with consciousness, even though some of these robots might not resemble humans at all, given the variety of roles they will perform.

Bill, who studied psychology and philosophy at Cambridge in the mid-1980s, where he was taught by, among others, Geoffrey Hinton, admitted that he favours leaving machines with agency ‘to their own devices’, unlike Wendy, who considers herself a biological supremacist. She challenged him on why the automated sex machines also portrayed in ‘Humans’ do not raise the same moral dilemma, as if the ‘female’ robots’ services were just a matter of computing power and not a question of ethics. Bill responded that the sex robots in ‘Humans’ had not been given the same level of consciousness.

Interestingly, the audience reacted with disapproval to the idea of having sex with a robot, perhaps because the presence of so many other people in the room made honesty hard to come by.

Dr Satinder Gill, an AI expert, psychologist and editor of the journal ‘AI & Society’, drew upon the observations made in her book ‘Tacit Engagement’, published on the very day of the Cybersalon event. She spoke about her research into how humans share knowledge that is deeply tacit, and how we can extract and use that knowledge in AI.

Technology allows people to present personas that are not their true selves, something made easier by the fact that much of our communication no longer takes place in a shared physical space. This, according to Satinder, raises important questions about the ethics of our behaviour.

Satinder used a short video of an experiment to illustrate how much tacit knowledge is involved in the mere act of greeting someone. The video showed strangers coming into a room, two at a time, walking across the room and then stopping to greet each other. Satinder argued that people who share the same culture are more likely to synchronise their greeting and perform corresponding movements at the same time, thanks to the shared tacit knowledge.

Martin Smith, who had been due to bring a robot with him, deflected attention from its absence by arguing that many of us humans are already partly robotic. Since a number of people carry devices such as pacemakers or artificial hearts inside their bodies, we are getting closer to robots, just as robots are getting closer to us.

Smith, who is Professor of Robotics at Middlesex University and President of the UK Cybernetics Society, argued that robots will far outstrip us in intelligence. They already have 20 to 30 senses that we simply cannot have, such as laser, radar and GPS. While signals travel along our nerve cells at around 100 metres per second, he said robots have a 3-million-fold advantage, with any signal in a robot travelling at half the speed of light (light itself travels at around 186,000 miles per second).
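The figures quoted can be checked with rough arithmetic. The check assumes the speed of light $c \approx 3\times10^{8}$ m/s (about 186,000 miles per second) and uses the 100 m/s nerve conduction speed Martin cited:

$$
\frac{c}{v_{\text{nerve}}} \approx \frac{3\times10^{8}\ \text{m/s}}{100\ \text{m/s}} = 3\times10^{6},
\qquad
\frac{c/2}{v_{\text{nerve}}} \approx \frac{1.5\times10^{8}\ \text{m/s}}{100\ \text{m/s}} = 1.5\times10^{6}
$$

The 3-million-fold figure corresponds to signals moving at the full speed of light. At half the speed of light the advantage is nearer 1.5 million times, which is still enormous.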

Martin emphasised that, although we like to think of ourselves as complex and special, we share a fair chunk of our DNA with other species, so describing ourselves simply as clever bacteria would be more apt. Even though robots, with all the extraordinary abilities they are bound to develop, will outstrip us in the future, they will not have gone through billions of years of evolution as humans have. As a result, they will always remain different from us, despite all the similarities we might give them.

Martin also argued that we should not let governments, which he sees as both corrupt and scientifically illiterate, stifle progress in the AI field. He favours allowing technology to develop unbounded, with the scientific community required to find solutions to any mishaps only once they occur.

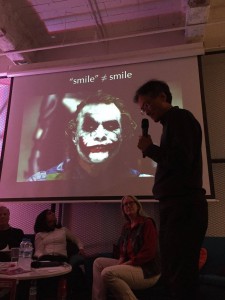

Dr Sha Xin-Wei, mathematician, computer scientist, and artist-researcher, spoke about how we have been ascribing human features to non-humans for centuries, for instance when playing pretend with children using inanimate objects. He argued that a clown, a figure most of us associate with entertainment, is in fact doomed to a tragic existence because we have given him a permanent smile, whatever his inner feelings might be.

Sha also elaborated on the notion of a ‘gear world’. When all the gears mesh perfectly, it takes only one small change to affect all the others. He argued that the more perfect and interconnected the world becomes, the more energy is needed to change anything, until that energy approaches infinity.

Sha’s work on context evokes the Kuleshov Effect. He argued that while humans can interpret context through perception, for instance by linking a neutral facial expression and an empty plate with hunger, robots, however smart, will not have the same capacity.

As Bill Thompson suggested, we are now going through a period of rapid development in the AI field. While it is important to take advantage of it and let it flourish, it is equally vital to put a system of oversight in place to prevent technology being used for unethical purposes. To avert the danger of government officials making politically motivated decisions, peers from engineering, philosophy and science should be put in charge of regulation, ensuring continuous technological progress that nevertheless fits within a moral framework.